EU AI Act: regulatory transformation for the smart nutrition ecosystem

The EU AI Act entered into force on August 1, 2024, a milestone for global technology regulation. The legislation is more than a new set of rules: it changes how artificial intelligence is integrated into European food and nutrition systems. Its impact extends from technological innovation to consumer protection, and from industrial competitiveness to scientific excellence, outlining a future in which AI technology serves people while respecting the European values of transparency, accountability, and sustainability.

Alessandro Drago

The Regulatory Framework: A Compliance Architecture for the Digital Era

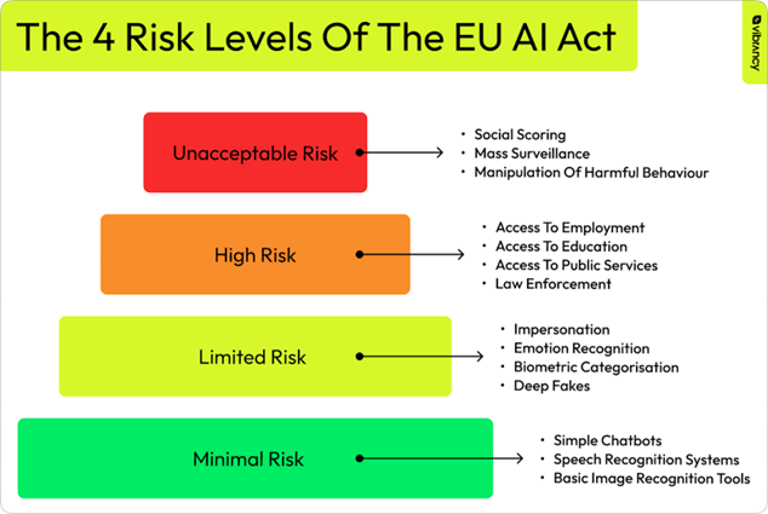

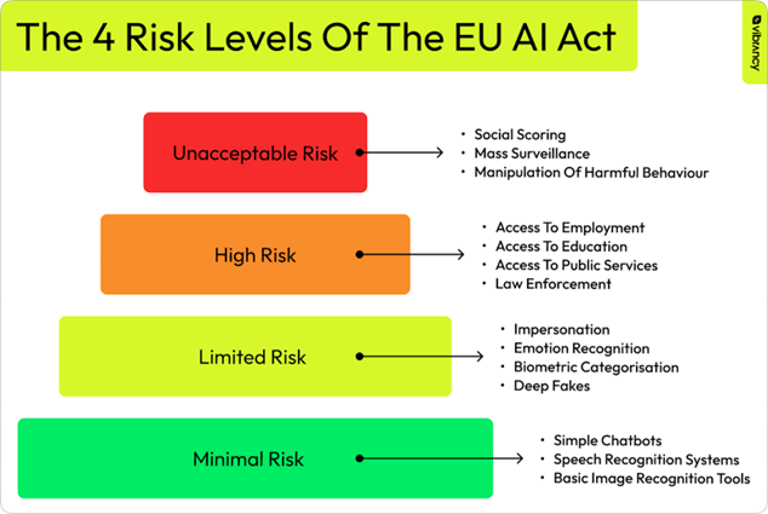

The EU AI Act represents the world's first comprehensive legislation on artificial intelligence, introducing an innovative risk-based approach that classifies AI systems into four main categories. This regulatory structure is not merely punitive, but is designed to foster responsible innovation by creating an environment of trust for consumers, businesses, and institutions.

Risk Classification and Sectoral Implications

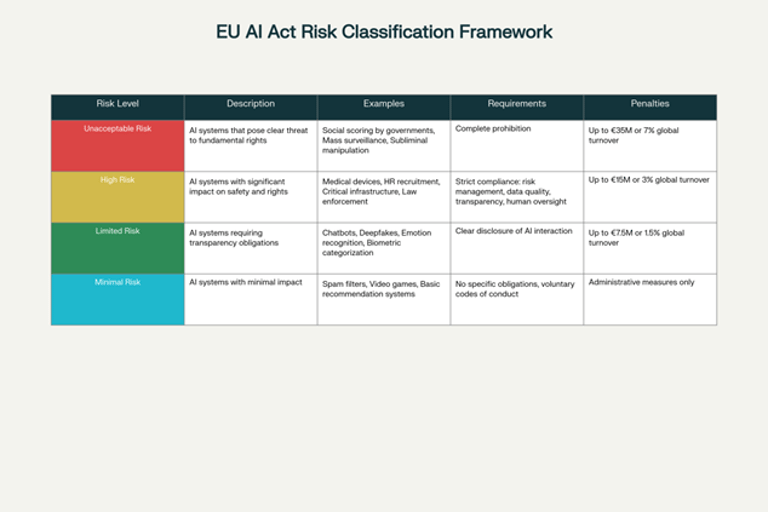

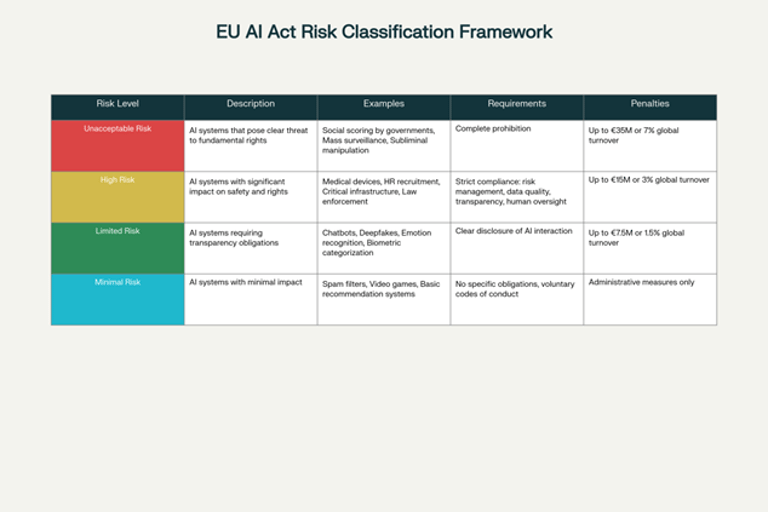

The EU AI Act's risk-based classification system creates a graduated framework that recognizes the diversity of AI applications in the food and nutrition sector. Minimal-risk systems, which include applications such as spam filters and AI-enabled video games, carry no specific obligations, although their providers can voluntarily adopt codes of conduct. These systems form the foundation of the AI ecosystem, providing support functionality without directly influencing consumers' critical decisions.

AI Risk Classification Framework According to EU AI Act

Systems with specific transparency risk, such as nutritional chatbots or food recommendation systems, must clearly inform users of their artificial nature. This category is particularly relevant for the nutrition industry, where many consumer-facing applications use AI to provide personalized advice. The required transparency is not only a matter of compliance, but becomes an element of competitive differentiation and trust building.

High-risk systems include many health and nutritional applications that can directly influence consumers' health decisions. These systems must comply with rigorous requirements including risk mitigation systems, high-quality datasets, clear information for users, and human supervision. For the personalized nutrition industry, this means implementing rigorous scientific validation protocols and ensuring that AI recommendations are always supported by solid evidence.

Unacceptable risk systems, such as "social scoring" systems, are completely banned. Although less directly relevant to the nutritional sector, these prohibitions establish clear ethical principles that inform responsible development of all AI applications.
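The four tiers described above can be sketched as a small lookup from tier to headline obligations. This is only an illustrative sketch: the `RiskTier` enum, the `OBLIGATIONS` table, and the sample product inventory are hypothetical names, the obligations are paraphrased from the text rather than quoted from the Act, and the tier assigned to each sample product is an assumption, not a legal classification.

```python
from enum import Enum


class RiskTier(Enum):
    MINIMAL = "minimal"
    TRANSPARENCY = "transparency"
    HIGH = "high"
    UNACCEPTABLE = "unacceptable"


# Headline obligations per tier, paraphrased from the article text.
OBLIGATIONS = {
    RiskTier.MINIMAL: ["voluntary codes of conduct"],
    RiskTier.TRANSPARENCY: ["tell users they are interacting with AI"],
    RiskTier.HIGH: [
        "risk mitigation system",
        "high-quality datasets",
        "clear information for users",
        "human supervision",
    ],
    RiskTier.UNACCEPTABLE: ["prohibited: do not deploy"],
}


def obligations_for(tier: RiskTier) -> list[str]:
    """Return the headline obligations for a risk tier."""
    return OBLIGATIONS[tier]


# Hypothetical inventory; tier assignments are illustrative assumptions.
inventory = {
    "spam filter for customer email": RiskTier.MINIMAL,
    "nutrition chatbot": RiskTier.TRANSPARENCY,
    "personalized health-related diet planner": RiskTier.HIGH,
}

for name, tier in inventory.items():
    print(f"{name}: {tier.value} -> {'; '.join(obligations_for(tier))}")
```

An inventory of this kind is mainly useful as a first triage step; the actual classification of a product still requires legal review.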

The Governance Architecture: Providers and Deployers

The EU AI Act introduces a fundamental distinction between providers and deployers of AI systems, each with specific obligations that reflect their role in the AI value chain. This division is particularly important in the nutritional ecosystem, where we often have technology companies developing generic AI platforms and food or supplement companies implementing them for their customers.

Providers are responsible for the design and development of AI systems, including implementing an AI Quality Management System that unifies documentation, conformity assessments, automated logs, corrective actions, post-market monitoring, and internal and external audits. For providers of AI nutritional technologies, this means establishing rigorous development processes that integrate scientific, ethical, and technical considerations from the earliest design phases.

Deployers, often nutritional companies using AI solutions developed by third parties, must ensure adequate levels of AI literacy among their staff, implement appropriate technical and organizational measures to ensure correct system use, manage input data quality, and suspend operation if the system does not function as expected. This distributed responsibility creates an accountability ecosystem that strengthens the safety and effectiveness of AI systems along the entire value chain.
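The deployer duties listed above lend themselves to a simple internal checklist. The sketch below is an assumption about how a company might track them; the `DeployerChecklist` class and its field names are hypothetical and merely mirror the four duties named in the text.

```python
from dataclasses import dataclass, fields


@dataclass
class DeployerChecklist:
    # Each flag mirrors one deployer duty named in the article (hypothetical names).
    staff_ai_literacy: bool
    usage_measures_in_place: bool
    input_data_quality_managed: bool
    suspension_procedure_defined: bool


def open_items(checklist: DeployerChecklist) -> list[str]:
    """Return the names of duties that are not yet satisfied."""
    return [f.name for f in fields(checklist) if not getattr(checklist, f.name)]


status = DeployerChecklist(
    staff_ai_literacy=True,
    usage_measures_in_place=False,
    input_data_quality_managed=True,
    suspension_procedure_defined=False,
)
print(open_items(status))
```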

The Implementation Timeline: A Strategic Roadmap

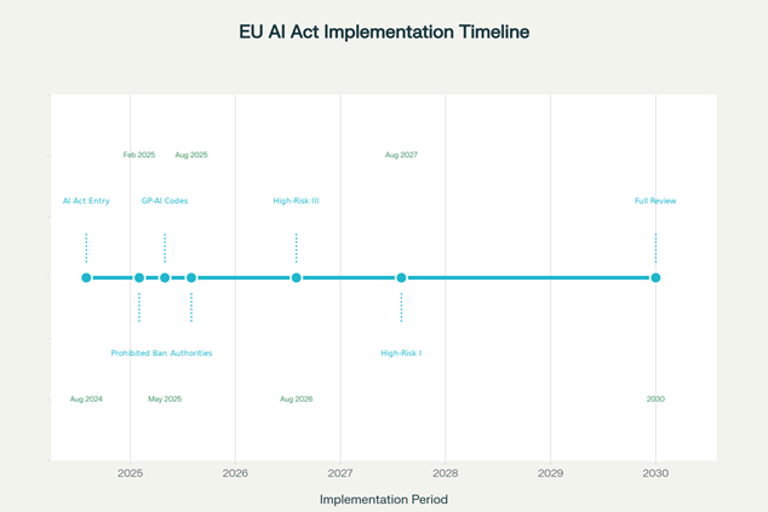

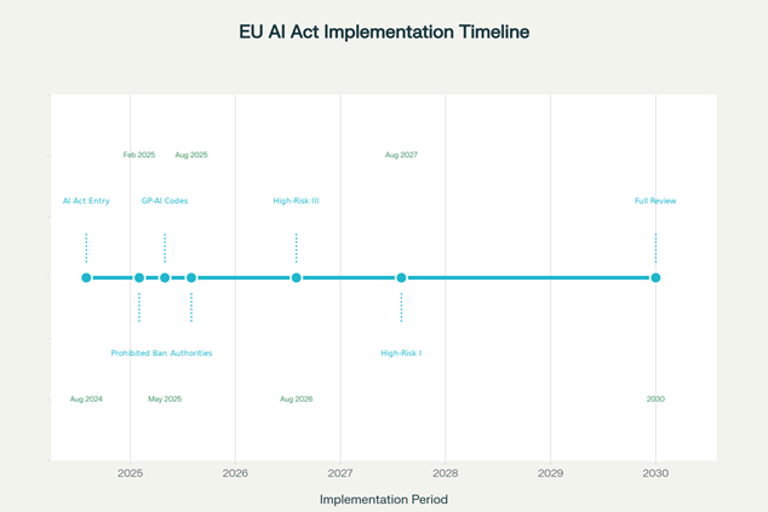

The EU AI Act implementation follows a staggered timeline that recognizes the complexity of industrial and technological adaptation. This gradual approach allows organizations to strategically plan their compliance investments and transform regulatory obligations into competitive opportunities.

The first phase, already in effect, concerns prohibitions on unacceptable-risk AI systems. Although these prohibitions do not directly affect most nutritional applications, they establish important ethical principles that inform all subsequent AI development. Since February 2025, companies must ensure that they neither offer nor use any prohibited system, and they should already be preparing for the subsequent phases. This period is crucial for nutrition organizations to evaluate their existing systems and identify which might require modifications to ensure future compliance.

The second critical phase arrives in August 2026, when obligations apply to the high-risk AI systems specifically listed in Annex III. This category covers systems used in areas such as biometrics, critical infrastructure, education, employment, access to essential private and public services, law enforcement, migration and border control, and the administration of justice. While not all of these areas are directly relevant to nutrition, many nutritional AI systems could be classified as high-risk if they significantly influence consumer health.

The final phase, in August 2027, extends obligations to Annex I AI systems and safety components of existing products. This is probably the most relevant phase for the nutrition industry, since many nutritional devices and applications could be classified as safety components of broader systems.
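The staggered timeline can be expressed as a date lookup that answers "which phases already apply on a given day?". This is a minimal sketch: the `MILESTONES` list and `applicable_on` function are hypothetical names, the labels are paraphrased from the text, and the day of the month (the 2nd) is taken from the Act's application dates rather than from this article, which gives only month and year.

```python
from datetime import date

# (application date, phase label); the labels paraphrase the article.
# The day-of-month values come from the Act itself, not from the article text.
MILESTONES = [
    (date(2025, 2, 2), "prohibitions on unacceptable-risk systems"),
    (date(2026, 8, 2), "obligations for Annex III high-risk systems"),
    (date(2027, 8, 2), "obligations for Annex I systems and safety components"),
]


def applicable_on(day: date) -> list[str]:
    """Return the phases whose application date is on or before `day`."""
    return [label for start, label in MILESTONES if day >= start]


for label in applicable_on(date(2026, 9, 1)):
    print(label)
```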

The Sanctioning System: Deterrence and Enforcement

The EU AI Act's sanctioning framework is structured on three progressive levels that reflect the severity of violations and create powerful incentives for proactive compliance. For the nutrition industry, understanding this system is essential for assessing risks and planning appropriate compliance investments.

The most severe sanctions, up to 35 million euros or 7% of annual worldwide turnover, whichever is higher, apply to the use of prohibited AI practices. Although few nutritional AI systems risk falling into this category, the potential consequences are severe enough to require particular attention in system design.

The second level, up to 15 million euros or 3% of annual worldwide turnover, applies to non-compliance with the specific obligations of providers, deployers, and other operators. This level is particularly relevant for the nutrition industry, since many personalized recommendation systems could be classified as high-risk.

The third level, up to 7.5 million euros or 1% of annual worldwide turnover, concerns supplying incorrect, incomplete, or misleading information to competent authorities. This emphasizes the importance of transparency and accuracy in documentation and communication with regulators.
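To make the three levels concrete, the following sketch computes the maximum possible fine for a given turnover. It assumes the Act's "whichever is higher" rule for ordinary companies and its "whichever is lower" rule for SMEs; both rules come from the Act's penalty provisions rather than from this article. The `TIERS` table, its keys, and the `fine_ceiling` function are hypothetical names, and the result is a ceiling, not a prediction of any actual fine.

```python
# violation key -> (fixed cap in EUR, percentage of annual worldwide turnover)
TIERS = {
    "prohibited_practice": (35_000_000, 7),
    "operator_obligation": (15_000_000, 3),
    "incorrect_information": (7_500_000, 1),
}


def fine_ceiling(violation: str, worldwide_turnover_eur: float, sme: bool = False) -> float:
    """Return the maximum fine for a violation category.

    Assumption: the higher of the two amounts applies, except for SMEs,
    where the lower of the two applies.
    """
    fixed_cap, pct = TIERS[violation]
    turnover_cap = worldwide_turnover_eur * pct / 100
    return min(fixed_cap, turnover_cap) if sme else max(fixed_cap, turnover_cap)


# A company with EUR 1 billion turnover: the 7% cap exceeds the fixed cap.
print(fine_ceiling("prohibited_practice", 1_000_000_000))
# A company with EUR 100 million turnover: the fixed cap exceeds the 3% cap.
print(fine_ceiling("operator_obligation", 100_000_000))
```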

The AI Ecosystem for Food Safety: A Systemic Transformation

The modern food safety landscape requires unprecedented integration of technology, data, and regulatory compliance. The emergence of an AI ecosystem for food safety represents a systemic transformation that touches every aspect of the food chain, from production to distribution, from monitoring to crisis response.

The Technological Architecture of Integration

The technological architecture of the AI ecosystem for food safety is based on three fundamental components that must operate in harmony while respecting the EU AI Act regulatory framework. Multi-source data integration includes Internet of Things (IoT) sensors distributed along the supply chain, meteorological and environmental data, laboratory information, epidemiological surveillance data, and market intelligence.

AI orchestrates these diverse data flows, identifying correlations and patterns that would be impossible to detect manually. However, in the context of the EU AI Act, these systems must ensure data quality and representativeness, implement transparency mechanisms, and maintain appropriate human supervision.

Real-time predictive analysis uses advanced Machine Learning algorithms to identify emerging risks before they manifest as crises. These systems can predict foodborne disease outbreaks, chemical contaminations, quality problems, and supply chain disruptions weeks or months in advance. The challenge in the EU AI Act context is ensuring that these predictive systems are sufficiently explainable and that their predictions can be validated by human experts.
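The requirement that predictions remain explainable and open to expert validation can be illustrated with a deliberately simple stand-in. The sketch below uses a z-score against a historical baseline, not the advanced machine learning models the text refers to, and routes any large deviation to a human reviewer together with a plain-language explanation. The function name, the threshold of 3.0, and the sample readings are all hypothetical.

```python
from statistics import mean, stdev


def flag_for_review(history: list[float], latest: float, threshold: float = 3.0) -> dict:
    """Score the latest reading against the baseline and decide on human review.

    A z-score is used because it is trivially explainable; it stands in for
    whatever predictive model a real system would use.
    """
    mu = mean(history)
    sigma = stdev(history)
    z = (latest - mu) / sigma if sigma > 0 else 0.0
    return {
        "z_score": round(z, 2),
        "needs_human_review": abs(z) >= threshold,
        "explanation": f"latest={latest} vs baseline mean={mu:.2f}, stdev={sigma:.2f}",
    }


# Hypothetical cold-chain temperature readings in degrees Celsius.
baseline = [4.0, 4.1, 3.9, 4.0, 4.2, 3.8]
print(flag_for_review(baseline, 6.0))
print(flag_for_review(baseline, 4.1))
```

The design point is that the system never acts on an alert by itself: it only produces a flag and an explanation that a human expert can check.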

Blockchain and Supply Chain Transparency

The integration of blockchain technology with AI systems creates an unprecedented level of transparency and traceability in the food supply chain, perfectly aligned with EU AI Act transparency requirements. Blockchain provides an immutable record of transactions and product movements, while AI analyzes this data to identify anomalies and risks.

Recent studies demonstrate that consumers are willing to pay a significant premium for products with blockchain-based traceability, particularly for sensitive products like infant formulas. This willingness to pay reflects a growing demand for transparency that AI can help satisfy while respecting regulatory requirements.

The combination of blockchain and AI enables the creation of "digital twins" of food products that follow each product from production to consumption. These digital twins provide complete visibility of the product's history and enable rapid interventions in case of safety problems, while creating the documentation necessary to demonstrate compliance with the EU AI Act.
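The "immutable record" idea behind these traceability systems can be shown with a minimal hash chain, in which each record stores the hash of the previous one so that any later alteration is detectable. This is a single-process sketch under simplifying assumptions: it is not a distributed ledger, and the `add_record` and `verify` helpers and the sample events are hypothetical.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder hash for the first record


def _digest(event: dict, prev_hash: str) -> str:
    """Hash an event together with the hash of the preceding record."""
    payload = json.dumps({"event": event, "prev_hash": prev_hash}, sort_keys=True)
    return hashlib.sha256(payload.encode()).hexdigest()


def add_record(chain: list[dict], event: dict) -> None:
    """Append an event, linking it to the previous record by hash."""
    prev_hash = chain[-1]["hash"] if chain else GENESIS
    chain.append({"event": event, "prev_hash": prev_hash, "hash": _digest(event, prev_hash)})


def verify(chain: list[dict]) -> bool:
    """Recompute every hash; any edited record breaks the chain."""
    prev_hash = GENESIS
    for record in chain:
        if record["prev_hash"] != prev_hash or record["hash"] != _digest(record["event"], prev_hash):
            return False
        prev_hash = record["hash"]
    return True


chain: list[dict] = []
add_record(chain, {"lot": "A123", "step": "produced"})
add_record(chain, {"lot": "A123", "step": "shipped"})
print(verify(chain))
```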

The Challenge of Trust and Credibility: Building Public Acceptance

Widespread adoption of AI in food systems depends critically on public trust, which must be built through transparency, reliability, and demonstrable results. The EU AI Act recognizes this challenge and incorporates specific requirements designed to build and maintain public trust.

The Trust Paradox of Supplement Users

A particularly interesting finding emerges from analysis of consumer trust in AI: supplement users show significantly greater trust in AI technology than non-users, with 64% viewing AI positively versus only 40% of non-users. This gap suggests that the supplement industry has a unique opportunity to lead in responsible AI adoption.

This differential trust can be attributed to several factors that the industry must understand and leverage responsibly. Supplement users tend to be more informed on health and nutrition topics, more open to new health technologies, and more oriented toward personalized solutions. Additionally, many supplement users already have experience with personalized recommendations based on questionnaires or tests, making the transition to AI-powered systems more natural.

Transparency and Disclosure Expectations

Consumer trust in AI is accompanied by high expectations for transparency and accountability that align perfectly with EU AI Act requirements. 87% of supplement users want AI involvement to be declared on product labels, compared to 79% of non-users. This demand for disclosure reflects a sophisticated understanding of AI's role and a desire for informed consent.

The nutrition industry has the opportunity to exceed minimum EU AI Act requirements, creating transparency standards that become a competitive advantage. This can include detailed explanations of how AI systems work, what data they use, and how recommendation decisions are made.

Research also shows that nearly two-thirds of supplement users are willing to allow AI to analyze their genetic makeup for personalized nutritional recommendations, representing nearly double the acceptance rate of non-users. This indicates significant openness toward more invasive AI applications when perceived benefit is high and transparency is guaranteed.

Market Opportunities and Growth Projections: The AI Nutrition Economy

The business case for AI in personalized nutrition continues to strengthen, supported by market projections showing exceptional growth potential and favorable demographic and technological factors. The EU AI Act, rather than slowing this growth, can accelerate it by creating an environment of greater trust and standardization.

Market Growth Dynamics

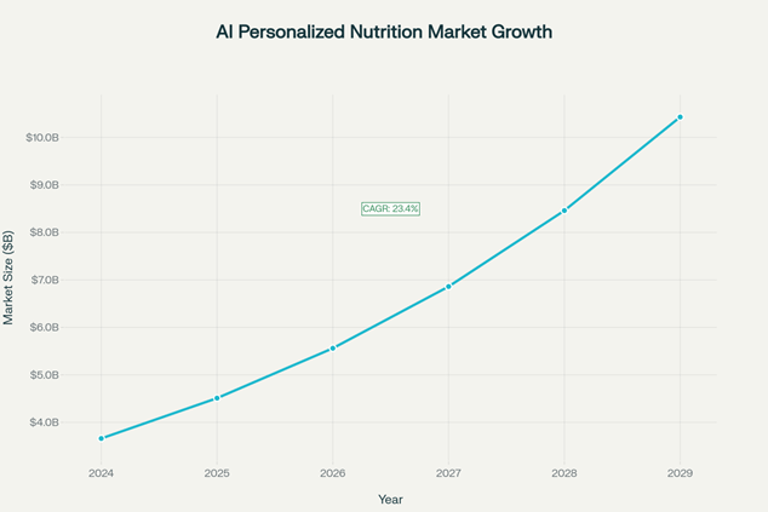

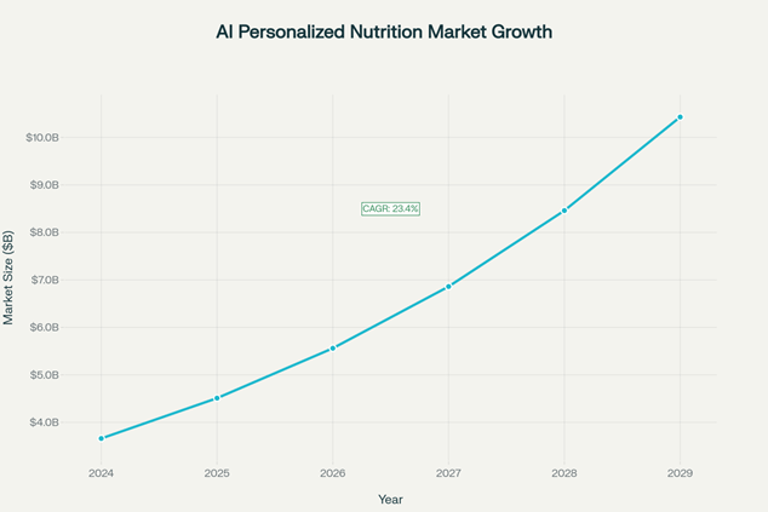

The global market for AI in personalized nutrition is projected to grow from $3.66 billion in 2024 to $10.43 billion by 2029, a compound annual growth rate of 23.4%. This exceptional growth reflects the convergence of various technological, demographic, and social trends that the EU AI Act is designed to support and regulate.
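As a sanity check on these projections, the compound annual growth rate can be recomputed from the two endpoint figures. The sketch assumes five compounding years between 2024 and 2029; the `cagr` helper is a hypothetical name for the standard formula.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    return (end_value / start_value) ** (1 / years) - 1


# Endpoint figures from the article, in billions of US dollars.
rate = cagr(3.66, 10.43, 5)
print(f"{rate:.1%}")
```

The result comes out at roughly 23.3%, close to the quoted 23.4%; the small gap is plausibly due to rounding in the published endpoint figures.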

Driving factors include increased investment in health tech, global population aging, wider use of AI for precision medicine, and the rising prevalence of lifestyle-related and chronic conditions such as diabetes and obesity. These trends create a market increasingly receptive to personalized and evidence-based nutritional solutions, exactly the type of applications that the EU AI Act is designed to make safer and more reliable.

The Impact of Regulation on Growth

Contrary to initial concerns, the EU AI Act can accelerate market growth by creating an environment of greater trust and standardization. Clear regulation reduces uncertainty for investors and companies, facilitating long-term planning and investment.

Compliance with the EU AI Act can become a competitive advantage for European companies in global markets, where "EU compliance" becomes synonymous with quality and reliability. This can lead to a "Brussels Effect" where European standards become de facto global standards, creating significant opportunities for companies that invest early in compliance.

The Competitive Advantage of Compliance: ROI of Responsibility

Organizations that proactively embrace the convergence of AI, regulation, and nutritional science not only mitigate risks but create sustainable competitive advantages that translate into superior financial performance.

Time-to-Market Acceleration

Companies using AI-driven compliance tools report 10 times faster entry into new markets while maintaining full regulatory adherence. This acceleration stems from AI's ability to automate compliance verification processes that traditionally required weeks or months of manual work.

Automation includes automatic checking of formulations against regulatory databases, automatic generation of compliance documentation, automatic verification of health claims, and proactive identification of potential regulatory problems. These processes not only accelerate time-to-market but also reduce the risk of human errors that can lead to costly delays or sanctions.
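The first of these automated checks, comparing a formulation against a regulatory database, might look like the sketch below. Everything in it is illustrative: the `LIMITS_MG` table stands in for a real regulatory database, its values are placeholders and NOT actual legal limits, and the ingredient names and `check_formulation` function are hypothetical.

```python
# Hypothetical per-serving limits in mg. Placeholder values, NOT real regulatory limits.
LIMITS_MG = {"caffeine": 200.0, "vitamin_b6": 12.0}


def check_formulation(formulation_mg: dict[str, float]) -> list[str]:
    """Return findings for ingredients that exceed a limit or have no limit on file."""
    findings = []
    for ingredient, amount in formulation_mg.items():
        limit = LIMITS_MG.get(ingredient)
        if limit is None:
            # Unknown ingredients are escalated to a person rather than silently passed.
            findings.append(f"{ingredient}: no limit on file, needs manual review")
        elif amount > limit:
            findings.append(f"{ingredient}: {amount} mg exceeds limit of {limit} mg")
    return findings


product = {"caffeine": 250.0, "vitamin_b6": 10.0, "ashwagandha": 300.0}
for finding in check_formulation(product):
    print(finding)
```

Escalating unknown ingredients to manual review, instead of treating them as compliant, keeps a human in the loop for the cases the database does not cover.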

Leading companies are developing "digital compliance engines" that integrate multiple regulatory sources and can evaluate new product compliance in real-time. These systems represent a significant competitive advantage in markets where innovation speed is crucial and where compliance is increasingly complex.

Consumer Trust Enhancement

The transparency and scientific rigor enabled by compliant AI systems translate directly into greater consumer trust. This is particularly important given that 87% of supplement users support mandatory AI declaration on labels.

Consumer trust translates into multiple positive business metrics, including higher customer lifetime value, reduced customer acquisition costs, enhanced brand loyalty, and premium pricing capability. Companies that invest in AI transparency see these benefits amplified over time, creating virtuous cycles of sustainable growth.

Conclusions: The Strategic Action Imperative

The EU AI Act represents more than a new regulation: it is a catalyst for a fundamental transformation of the nutrition industry toward greater responsibility, transparency, and effectiveness. Organizations that understand this dynamic and act proactively will not only ensure compliance but emerge as leaders in a new industrial paradigm.

The convergence of AI, European regulation, and nutritional innovation creates unprecedented opportunities for visionary companies willing to invest in building responsible and transparent systems. The future belongs to those who can masterfully navigate the intersection of science, technology, and regulation, creating solutions that serve both business imperatives and the public good.

Disclaimer: This content is used solely for educational and informational purposes. All texts and images are not owned or claimed by the user. No copyright or proprietary rights are claimed or transferred.

Contact details

Nutri-AI 2025 - Alessandro Drago. All rights reserved.

e-mail: info@nutri-ai.net